Gene editing of human embryos yields early results

Scientists have long sought a strategy for curing genetic diseases, but — with just a few notable exceptions — have succeeded only in their dreams. Now, though, researchers in China and Texas have taken a step toward making the fantasies a reality for all inherited diseases.

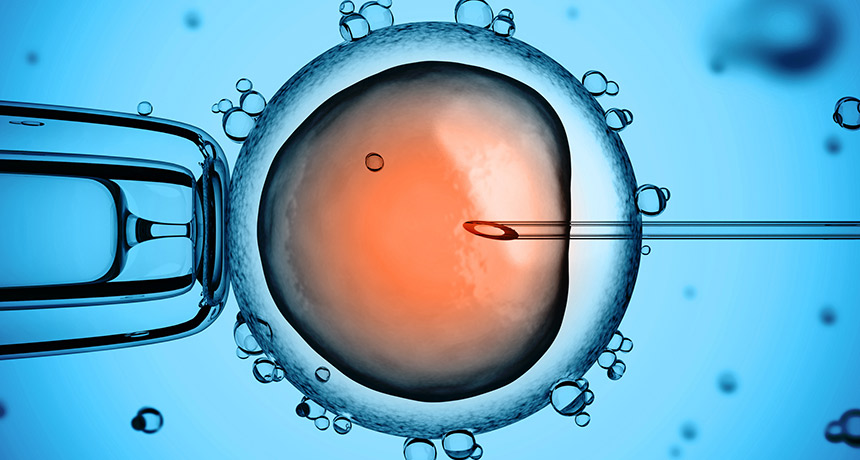

Using the gene-editing tool known as CRISPR/Cas9, the researchers have successfully edited disease-causing mutations out of viable human embryos. Other Chinese groups had previously reported editing human embryos that could not develop into a baby because they carried extra chromosomes, but this is the first report involving viable embryos (SN Online: 4/8/16; SN Online: 4/23/15).

In the new work, reported March 1 in Molecular Genetics and Genomics, Jianqiao Liu of Guangzhou Medical University in China and colleagues used embryos with a normal number of chromosomes. The embryos were created using eggs and sperm left over from in vitro fertilization treatments. In theory, the embryos could develop into a baby if implanted into a woman’s uterus.

Researchers in Sweden and England are also conducting gene-editing experiments on viable human embryos (SN: 10/29/16, p. 15), but those groups have not yet reported results.

Human germline editing wasn’t realistic until CRISPR/Cas9 and other new gene editors came along, says R. Alta Charo, a bioethicist at the University of Wisconsin Law School in Madison. “We’ve now gotten to the point where it’s possible to imagine a day when it would be safe enough” to be feasible. Charo was among the experts on a National Academies of Sciences and Medicine panel that in February issued an assessment of human gene editing. Altering human embryos, eggs, sperm or the cells that produce eggs and sperm would be permissible, provided there were no other alternatives and the experiments met other strict criteria, the panel concluded (SN: 3/18/17, p. 7).

Still, technical hurdles remain before CRISPR/Cas9 can cross into widespread use in treating patients.

CRISPR/Cas9 comes in two parts: a DNA-cutting enzyme called Cas9, and a “guide RNA” that directs Cas9 to cut at a specified location in DNA. Guide RNAs work a little like a GPS system, says David Edgell, a molecular biologist at Western University in London, Ontario. Given precise coordinates or a truly unique address, a good GPS should take you to the right place every time.

Scientists design guide RNAs so that they will carry Cas9 to only one stretch of about 20 bases (the information-carrying subunits of DNA) out of the entire 6 billion base pairs that make up the human genetic instruction book, or genome. But most 20-base locations in the human genome aren’t particularly distinctive. They are like Starbucks coffee shops: There are a lot of them and they are often similar enough that a GPS might get confused about which one you want to go to, says Edgell. Similarly, guide RNAs sometimes direct Cas9 to cut alternative, or “off-target,” sites that are a base or two different from the intended destination. Off-target cutting is a problem because such edits might damage or change genes in unexpected ways.
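The address-matching problem Edgell describes can be sketched in a few lines of Python. This is only a toy illustration, not a tool used in any of the studies: the function names, the two-mismatch threshold and the made-up sequences are all assumptions chosen for the example, and real off-target prediction weighs many other factors.

```python
def mismatches(a: str, b: str) -> int:
    """Count positions where two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def find_sites(genome: str, guide: str, max_mismatches: int = 2):
    """Return (position, site, mismatch_count) for every stretch of the
    genome that differs from the guide by at most max_mismatches bases."""
    k = len(guide)
    hits = []
    for i in range(len(genome) - k + 1):
        site = genome[i:i + k]
        d = mismatches(site, guide)
        if d <= max_mismatches:
            hits.append((i, site, d))
    return hits


# Toy data invented for illustration (not real genomic sequence).
guide = "GATTACAGATTACAGATTAC"        # 20 bases, the intended address
off_target = "GATTACAGATTTCAGATTAC"   # same stretch with one base changed
genome = "CCCCC" + guide + "TTTTTTTT" + off_target + "GGGGG"

hits = find_sites(genome, guide)
for position, site, d in hits:
    label = "on-target" if d == 0 else "possible off-target"
    print(position, site, d, label)
```

In this toy genome the scan flags both the intended site and its near-twin, which is the Starbucks problem in miniature: the more look-alike stretches a genome holds, the more places a guide RNA might lead Cas9 astray.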

“It’s a major issue for sure,” says Bruce Korf, a geneticist at the University of Alabama at Birmingham and president of the American College of Medical Genetics and Genomics Foundation. Doctors trying to correct one genetic defect in a patient want to be sure they aren’t accidentally introducing another.

But CRISPR/Cas9’s propensity to cut undesired sites may be exaggerated, says Alasdair MacKenzie, a molecular biologist at the University of Aberdeen in Scotland. In experiments with mice, MacKenzie and colleagues limited how much Cas9 was produced in cells and made sure the enzyme didn’t stick around after it made an edit. No off-target cuts were detected in any of the mice resulting from successfully edited embryos, MacKenzie and colleagues reported in November in Neuropeptides.

Other researchers have experimented with assembling the Cas9 and guide RNAs outside of the cell and then putting the preassembled protein-RNA complex into cells. That’s the strategy the Chinese researchers took in the new human embryo–editing study. No off-target cuts were detected in that study either, although only one edited embryo was closely examined.

Other researchers have been tinkering with the genetic scissors to produce high-fidelity versions of Cas9 that are far less likely to cut at off-target sites in the first place.

When a guide RNA leads Cas9 to a site that isn’t a perfect match, the enzyme can latch onto DNA’s phosphate backbone and stabilize itself enough to make a cut, says Benjamin Kleinstiver, a biochemist in J. Keith Joung’s lab at Harvard Medical School. By tweaking Cas9, Kleinstiver and colleagues essentially eliminated the enzyme’s ability to hold on at off-target sites, without greatly harming its on-target cutting ability.

Regular versions of Cas9 cut between two and 25 off-target sites for seven guide RNAs the researchers tested. But the high-fidelity Cas9 worked nearly flawlessly for those guides. For instance, high-fidelity Cas9 reduced off-target cutting from 25 sites to just one for one of the guide RNAs, the researchers reported in January 2016 in Nature. That single stray snip, however, could be a problem if the technology were to be used in patients.

A group led by CRISPR/Cas9 pioneer Feng Zhang of the Broad Institute of MIT and Harvard tinkered with different parts of the Cas9 enzyme. That team also produced a cutter that rarely cleaved DNA at off-target sites, the team reported last year in Science.

Another problem for gene editing has been that it is good at disabling, or “knocking out,” genes that are causing a problem but not at replacing genes that have gone bad. Knocking out a gene is easy because all Cas9 has to do is cut the DNA. Cells generally respond by gluing the cut ends back together. But, like pieces of a broken vase, they rarely fit perfectly again. Small flaws introduced in the regluing can cause the problem gene to produce nonfunctional proteins. Knocking out genes may help fight Huntington’s disease and other genetic disorders caused by single, rogue versions of genes.

Many genetic diseases, such as cystic fibrosis or Tay-Sachs, are caused when people inherit two mutated, nonfunctional copies of the same gene. Knocking those genes out won’t help. Instead, researchers need to insert undamaged versions of the genes to restore health. Inserting a gene starts with cutting the DNA, but instead of gluing the cut ends together, cells use a matching piece of DNA as a template to repair the damage.

In the new human embryo work, Liu and colleagues, including Wei-Hua Wang of the Houston Fertility Institute in Texas, first tested this type of repair on embryos with an extra set of chromosomes. Efficiency was low: Only about 10 to 20 percent of embryos contained the desired edits. Researchers had previously argued that extra chromosomes could interfere with the editing process, so Liu’s group also made embryos with the normal two copies of each chromosome (one from the father and one from the mother). Sperm from men who have genetic diseases common in China were used to fertilize eggs. In one experiment, Liu’s group made 10 embryos, two of which carried a mutation in the G6PD gene. Mutations in that gene can lead to a type of anemia.

Then the team injected Cas9 protein already leashed to its guide RNA, along with a separate piece of DNA that embryos could use as a template for repairing the mutant gene. G6PD mutations were repaired in both embryos. Because both embryos carried the repair, the researchers say they achieved 100 percent efficiency. But one embryo was a mosaic: It carried the fix in some but not all of its cells. Another experiment to repair mutations in the HBB gene, linked to blood disorders, worked with 50 percent efficiency, though with some other technical glitches.
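How efficiency is counted matters here. The short Python sketch below tallies the two G6PD embryos in two ways: by whether an embryo carries the repair at all, and by whether every cell carries it. The embryo count and the single mosaic come from the study as described above; the per-embryo cell numbers are hypothetical placeholders, since the article does not give them.

```python
from dataclasses import dataclass


@dataclass
class Embryo:
    cells_total: int      # cells examined (hypothetical count)
    cells_repaired: int   # cells carrying the corrected gene (hypothetical count)

    @property
    def has_repair(self) -> bool:
        return self.cells_repaired > 0

    @property
    def is_mosaic(self) -> bool:
        # Repaired in some cells but not all of them.
        return 0 < self.cells_repaired < self.cells_total


def percent(part: int, whole: int) -> float:
    return 100.0 * part / whole


# Two embryos with the G6PD mutation: one fully repaired, one mosaic.
# The 8-cell and 5-of-8 figures are invented for illustration.
embryos = [Embryo(cells_total=8, cells_repaired=8),
           Embryo(cells_total=8, cells_repaired=5)]

with_any_repair = sum(e.has_repair for e in embryos)
fully_repaired = sum(e.has_repair and not e.is_mosaic for e in embryos)

any_repair_pct = percent(with_any_repair, len(embryos))
fully_repaired_pct = percent(fully_repaired, len(embryos))

print(f"Embryos with any repaired cells: {any_repair_pct:.0f}%")
print(f"Embryos repaired in every cell:  {fully_repaired_pct:.0f}%")
```

Counted the first way, the result matches the 100 percent the researchers report. Counted the second way, the same two embryos give 50 percent, which is why mosaics complicate any claim of success.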

Scientists don’t know whether editing just some cells in an embryo will be enough to cure genetic diseases. For that reason, some researchers think it may be necessary to step back from embryos to edit the precursor cells that produce eggs and sperm, says Harvard University geneticist George Church. Precursor cells can produce many copies of themselves, so some could be tested to ensure that proper edits have been made with no off-target mutations. Properly edited cells would then be coaxed into forming sperm or eggs in lab dishes. Researchers have already succeeded in making viable sperm and eggs from reprogrammed mouse stem cells (SN: 11/12/16, p. 6). Precursors of human sperm and eggs have also been grown in lab dishes (SN Online: 12/24/14), but researchers have yet to report making viable human embryos from such cells.

The technology to reliably and safely edit human germline cells will probably require several more years of development, researchers say.

Germline editing — as altering embryos, eggs and sperm or their precursors is known — probably won’t be the first way CRISPR/Cas9 is used to tackle genetic diseases. Doctors are already planning experiments to edit genes in body cells of patients. Those experiments come with fewer ethical questions but have their own hurdles, researchers say.

“We still have a few years to go,” says MacKenzie, “but I’ve never been so hopeful as I am now of the capacity of this technology to change people’s lives.”